Introduction: Curiosity and a Challenge

As a professional mobile app developer, I spend my days building high-quality applications for clients across industries. But late at night—once the house is quiet and the kids are asleep—I get to explore.

Recently, I gave myself a challenge:

Can AI actually build an entire mobile app?

Could I go from idea to app store using AI tools (primarily Cursor) to write the code? Would the result be useful, or would it fall apart?

And the question running in the back of my mind the whole time:

Will AI take my job?

So, I built a Crypto Quiz trivia app in just 2 months of spare-time effort.

This is how it went—from first idea to live store listings, with all the lessons (and laughs) in between.

Idea to Action

I didn’t start with a formal business case. I didn’t validate anything.

This was about process, not product. I wanted to see how far AI could take me.

The idea? A simple trivia app. The subject matter—cryptocurrency—came from my business partner.

The goal? To see how AI-generated code would stand up in a real mobile app project.

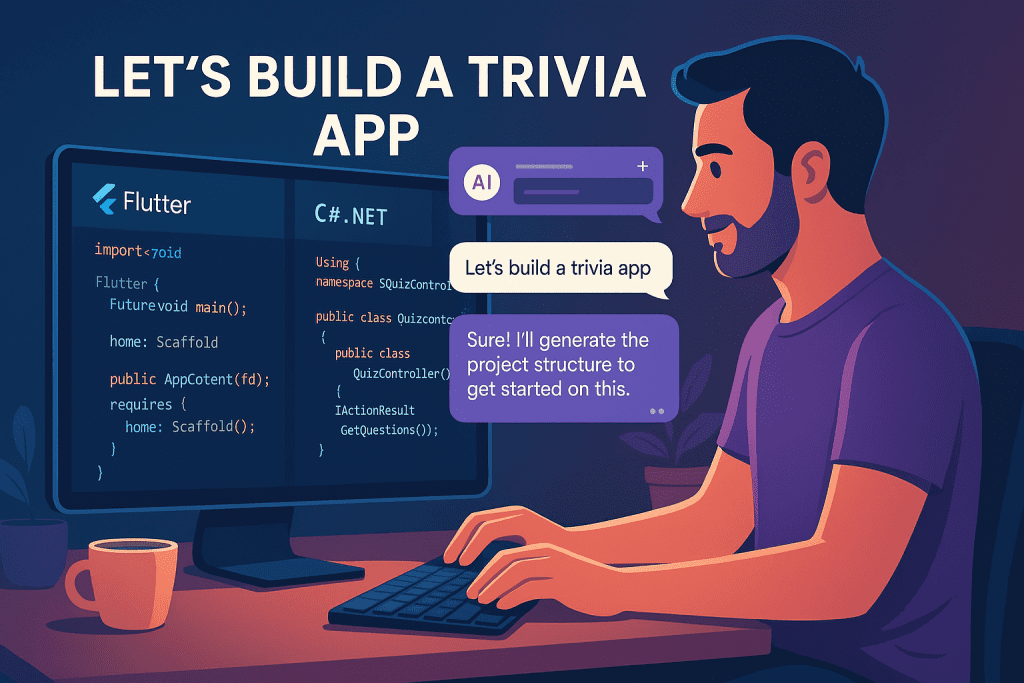

Frontend: Flutter (targeting iOS, Android, Web, Desktop)

Backend: .NET Core Web API

Architecture: Thin client, all data (questions, scoring, etc.) fetched from the server

No planning. No feature list. Just a rough outline.

And that’s when the chaos (and fun) began.

Letting AI Loose

I opened up Cursor (an AI-powered fork of VS Code) and gave it my first instruction:

“Let’s build a crypto quiz app.”

From there, it went wild.

Generated an entire Flutter frontend UI, complete with dark mode.

Wrote backend services in .NET Core.

Invented multiplayer support (which I didn’t ask for).

Created a scoring system and even started writing database scripts.

That’s right: it wrote so much code that I had to ask it to stop.

It reminded me of a first-year uni student discovering recursion: smart, enthusiastic, but lacking discipline or context.

AI didn’t ask questions.

It didn’t verify assumptions.

It just did things.

And half the time, I was pulling it back into reality. It generated unused code. Broke working code. Rewrote things that were nearly right and made them worse.

Still, there were moments of brilliance…

…and those kept me going.

The Power (and Limits) of Cursor

Cursor was the hero tool in this journey. For anyone unfamiliar, it’s an AI-first IDE based on VS Code—supercharged with in-editor codegen, refactors, and chat.

What I loved:

No more copy-pasting from ChatGPT — it writes directly into your files.

It could see the whole codebase in a shared folder, making it easier to align frontend/backend models.

It made me feel like I had a coding assistant on tap.

What it couldn’t do:

Replace Rider or Android Studio for debugging (yet).

Match the structure and discipline that years of professional experience bring.

Old habits die hard, so I still leaned on my usual IDEs to make sure things actually ran smoothly. But Cursor became my go-to brainstorming companion.

Architecture Decisions (That AI Didn’t Make)

One thing quickly became clear: AI doesn’t architect. Developers do.

It never asked the kind of questions a teammate would:

Should this be stored in JSON or a database?

Should we split widgets?

Should we cache this on the client?

Do you want ads or in-app purchases?

So I stepped in:

Reined in multiplayer

Ditched the database for quiz questions (static JSON made more sense)

Decided to use Firebase for Crashlytics and AdMob integration

Monetization wasn’t the goal, but I’d never integrated AdMob before—so why not learn?

The key was that I always made the big decisions.

AI was like a junior dev: smart, helpful, but directionless without a lead.
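The decision to swap the database for static JSON, combined with the thin-client outline (questions and scoring served from the backend), can be sketched in a few lines. This is a minimal, hypothetical example rather than the app's actual code: the schema (`id`, `text`, `options`, and `answer` as an option index) and the function names `load_questions` and `score` are assumptions, and it is written in Python for brevity instead of the C# the backend actually uses.

```python
import json
from dataclasses import dataclass

# Hypothetical question bank. The real schema isn't described,
# so these field names are assumptions for illustration only.
QUESTIONS_JSON = """
[
  {"id": "q1", "text": "Who published the Bitcoin whitepaper?",
   "options": ["Vitalik Buterin", "Satoshi Nakamoto", "Charlie Lee"], "answer": 1},
  {"id": "q2", "text": "Which consensus mechanism does Bitcoin use?",
   "options": ["Proof of Work", "Proof of Stake"], "answer": 0}
]
"""


@dataclass(frozen=True)
class Question:
    id: str
    text: str
    options: tuple[str, ...]
    answer: int  # index of the correct entry in `options`


def load_questions(raw: str) -> list[Question]:
    """Parse a static JSON question bank and reject obviously bad entries."""
    questions: list[Question] = []
    seen_ids: set[str] = set()
    for item in json.loads(raw):
        q = Question(
            id=item["id"],
            text=item["text"],
            options=tuple(item["options"]),
            answer=int(item["answer"]),
        )
        # A static file has no database constraints, so check them here.
        if q.id in seen_ids:
            raise ValueError(f"duplicate question id: {q.id}")
        if not 0 <= q.answer < len(q.options):
            raise ValueError(f"answer index out of range for {q.id}")
        seen_ids.add(q.id)
        questions.append(q)
    return questions


def score(questions: list[Question], submitted: dict[str, int]) -> int:
    """Server-side scoring: count submitted answers that match the key.

    Unknown question ids are ignored, so they score zero.
    """
    key = {q.id: q.answer for q in questions}
    return sum(1 for qid, choice in submitted.items() if key.get(qid) == choice)


if __name__ == "__main__":
    bank = load_questions(QUESTIONS_JSON)
    print(len(bank), score(bank, {"q1": 1, "q2": 1}))  # prints "2 1"
```

For read-only content like a question bank, a file validated once at load time avoids running a database at all. The trade-off is that checks a database constraint could enforce, such as unique ids, have to live in code like `load_questions`.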

Time Management in the Real World

This wasn’t a 9-to-5 project.

I had a full-time job, client projects, and parenting duties.

Weeknights: ~2 hours

Weekends: 4–6 hours, if the kids cooperated

Most sessions were quick back-and-forth exchanges rather than deep focus work. I’d feed the AI a prompt, review the code, correct its course, and repeat.

Because the AI could do so much heavy lifting, it felt more productive than it probably was.

It also made the process more entertaining. Sometimes I laughed. Sometimes I swore at my screen. But it always felt like progress.

The Hardest Part? The UI

AI doesn’t understand aesthetics. It doesn’t use the apps it builds.

The UX was… meh.

Functional, but not fun.

Here’s where I struggled:

Layouts that looked awkward or crowded

Widgets with missing logic

State management issues that caused crashes

AI didn’t care that the back button didn’t make sense, or that a screen was ugly.

It just did what it was told—and sometimes too much.

In the end, I had to:

Delete entire sections of AI-generated code

Restructure UI flows

Add polish that only a human would know to add

Testing and Polishing

There was no test plan. I didn’t write unit tests.

This was manual, messy testing on real devices and emulators.

Eventually I got it stable enough to call it an MVP (minimum viable product) I was proud of.

But even then, the AI would frustrate me: just when a fix worked, it would suggest rewriting the whole block and break it all over again.

That’s when the Good Will Hunting quote hit me:

“You’ve never held your best friend’s head in your lap and watched him gasp his last breath looking to you for help.”

AI had read all the books. But it hadn’t lived the code, and it takes no responsibility for what it generates.

Submission to App Stores

This part I did manually—intentionally.

You don’t want a robot hitting “submit” to the App Store.

Human QA matters.

I:

Took screenshots

Wrote copy (with AI’s help)

Uploaded the build to Google Play and the Apple App Store

Both were approved within a week.

No rejections. A smooth process, thanks to 15+ years of mobile dev experience.

One crash report came in (x64 processor issue on Android).

I fixed it. Released a patch. That’s just part of the lifecycle.

After Launch

Downloads to date: ~20

Revenue from ads: 9 cents

Emotional ROI: Priceless.

I don’t consider it a failure.

This wasn’t about making money. It was about exploring a workflow.

Could it be better? Absolutely.

The UI needs work.

The gameplay could be more fun.

It needs gamification, animation, and a reason to come back.

So… do I market it?

Maybe. But I think I want to polish it more first.

Lessons Learned

Here’s what I took away from the experiment:

AI gets code written faster, not better.

AI can’t replace architecture, UX, or long-term thinking.

It’s great for tricky little problems (SQL, edge-case bugs, boilerplate).

But it still needs a human driver.

I’ve started writing documentation that defines game mechanics and coding standards, so AI has something to reference next time.

It’s not about firing developers.

It’s about giving them better tools.

AI Pro Tip — Use Cursor

If you’re a developer, do yourself a favour:

Try Cursor.

It’s a fork of Visual Studio Code built with AI-first development in mind.

Cursor lets you:

Chat directly with the code

Insert code suggestions inline

Understand your whole repo contextually

I used Claude 3.5 inside Cursor, but it works with GPT-4 and others.

It reduced friction, boosted speed, and gave me insights I didn’t expect.

Cursor didn’t replace me.

But it made me faster, more creative, and (sometimes) lazier in the best possible way.

At Australian App Development, we work at the bleeding edge of AI, mobile apps, and cloud tech.

If you’re wrestling with how to:

Integrate AI into your development team

Fix a messy AI-generated codebase

Build scalable mobile apps in Flutter, Xamarin, or React Native

Or just want experts who understand modern development inside-out

Let’s chat.

We’re real people who know how to use AI responsibly, and we’re not afraid to dig into the details.

👉 Click the Chat Now button and tell us about your idea.

We’ll help you turn AI into a tool—not a trap.